How to Change Your Assessments in the AI Age

So, everyone is cheating their way through college? And yet, get this... we aren't going to change college teaching and learning!

Imagine your favorite team is losing at halftime. Instead of making adjustments, the coaches stay the course.

Now imagine the rules of the game have changed, the team has an entire offseason to prepare, and yet the coaches still stay the course.

Now make it three offseasons, with no real changes.

What's worse, you can see the coaches on the other team making all kinds of changes. Some have worked and others haven't, but at least they are acknowledging a simple fact: we need to adjust to a new game.

This isn’t only happening at universities; it’s happening in K-12 too. We can’t keep assessing only final products while missing the chance to assess learning during the process.

Conversations About Learning

Assessments are a conversation about learning.

The best assessments give us a snapshot of a learner’s knowledge acquisition and understanding. They also give learners an opportunity to demonstrate their skills and development in targeted areas.

This is why so many people, me included, are a bit worried about AI’s role in assessment practices.

Thankfully, a group of educators and researchers has spent the last three years “changing their game” when it comes to AI and assessment. They’ve done research, created a new framework, and then revised that framework based on feedback and additional studies. Here’s everything you need to know.

The AI Assessment Scale (AIAS)

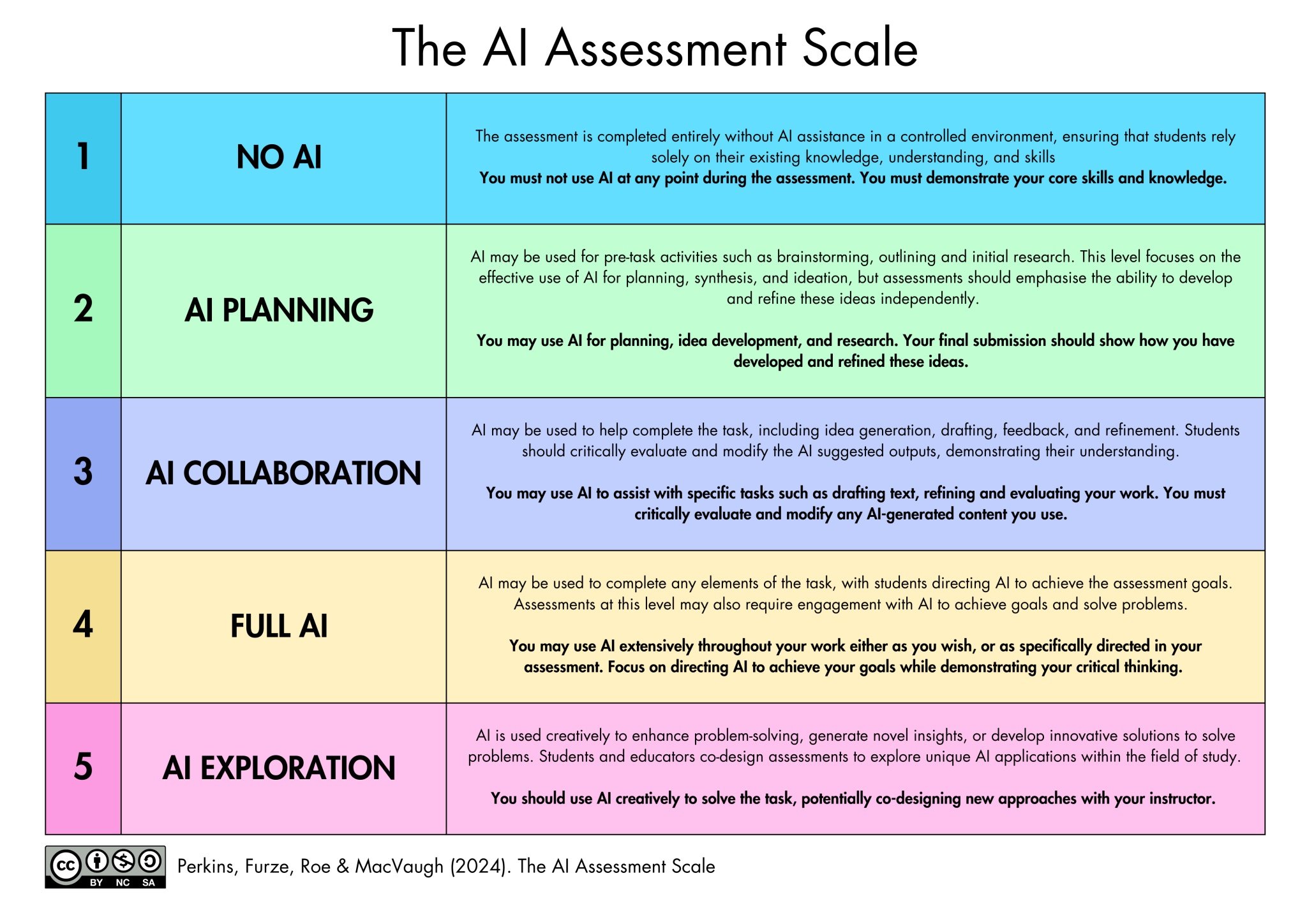

The AI Assessment Scale (AIAS) was developed by Mike Perkins, Leon Furze, Jasper Roe, and Jason MacVaugh. First introduced in 2023 and updated in Version 2 (2024), the Scale provides a nuanced framework for integrating AI into educational assessments.

The AIAS has been adopted by hundreds of schools and universities worldwide, translated into 29 languages, and is recognised by organisations such as the Australian Tertiary Education Quality and Standards Agency (TEQSA) as an effective way to implement GenAI into assessment.

On the AIAS website, the authors break down each level of the updated scale:

1. No AI

The assessment is completed entirely without AI assistance in a controlled environment, ensuring that students rely solely on their existing knowledge, understanding, and skills.

You must not use AI at any point during the assessment. You must demonstrate your core skills and knowledge.

2. AI Planning

AI may be used for pre-task activities such as brainstorming, outlining and initial research. This level focuses on the effective use of AI for planning, synthesis, and ideation, but assessments should emphasise the ability to develop and refine these ideas independently.

You may use AI for planning, idea development, and research. Your final submission should show how you have developed and refined these ideas.

3. AI Collaboration

AI may be used to help complete the task, including idea generation, drafting, feedback, and refinement. Students should critically evaluate and modify the AI-suggested outputs, demonstrating their understanding.

You may use AI to assist with specific tasks such as drafting text, refining and evaluating your work. You must critically evaluate and modify any AI-generated content you use.

4. Full AI

AI may be used to complete any elements of the task, with students directing AI to achieve the assessment goals. Assessments at this level may also require engagement with AI to achieve goals and solve problems.

You may use AI extensively throughout your work either as you wish, or as specifically directed in your assessment. Focus on directing AI to achieve your goals while demonstrating your critical thinking.

5. AI Exploration

AI is used creatively to enhance problem-solving, generate novel insights, or develop innovative solutions to solve problems. Students and educators co-design assessments to explore unique AI applications within the field of study.

You should use AI creatively to solve the task, potentially co-designing new approaches with your instructor.

Why This Matters

I’ve previously written about the Traffic Light Protocol, which is a great jumping-off point for discussions on AI use in the classroom. However, the AIAS goes deeper into the realm of assessment.

When we ask how we can change the game, it ultimately comes back to assessment.

If we use the same assessments, it is difficult to change instruction.

If we use the same assessment practices, we’ll always see AI as a possible cheating tool, instead of a tool (and skillset) that levels up learners’ ability to acquire knowledge and transfer their skills.

Interested in what updated assessments might look like? Leon Furze offers a free eBook with over 50 assessment ideas aligned to the AIAS when you join his mailing list (recommended reading!).

As the authors point out in a recent journal article:

The AIAS empowers educators to select the appropriate level of GenAI usage in assessments based on the learning outcomes they seek to address. The AIAS offers greater clarity and transparency for students and educators, provides a fair and equitable policy tool for institutions to work with, and offers a nuanced approach which embraces the opportunities of GenAI while recognising that there are instances where such tools may not be pedagogically appropriate or necessary. By adopting a practical, flexible approach that can be implemented quickly, the AIAS can form a much-needed starting point to address the current uncertainty and anxiety regarding GenAI in education.

Now, let’s take this a step further for our own practice.

How can we change our assessments? Let’s look at some examples at each level of the scale:

1. No AI

Traditional Assessment:

Essay: In-class handwritten essay on “The Causes of the American Revolution.”

Science Project: Lab practical where students conduct an experiment and write observations on paper.

Math: Timed, in-class test solving algebraic equations.

AI-Adjusted Scenario:

Students complete all work in a controlled, AI-free environment (paper/pencil, no devices).

Focus: Demonstrate unaided knowledge and skills.

2. AI Planning

Traditional Assessment:

Essay: Essay on “Climate Change Solutions.”

Science Project: Research-based poster on renewable energy.

Math: Take-home problem set on geometry.

AI-Adjusted Scenario:

Essay: Students use AI to brainstorm ideas, outline their essay, and gather sources. The final essay is written independently, with a reflection on how AI shaped their planning.

Science Project: Students use AI to generate research questions and outline their poster but must gather data and design the poster themselves. Submission includes a planning log showing AI interactions.

Math: Students use AI to identify strategies or hints for solving the problems, but all calculations and reasoning are done by hand. They submit a brief note on how AI helped them plan their approach.

3. AI Collaboration

Traditional Assessment:

Essay: Literary analysis essay on a novel.

Science Project: Lab report on plant growth.

Math: Extended word problems requiring written explanations.

AI-Adjusted Scenario:

Essay: Students draft their essay, then use AI for feedback or suggested improvements. They must critically evaluate the AI’s suggestions and revise their draft, highlighting what they changed and why, demonstrating critical thinking.

Science Project: Students write their lab report, use AI to check for clarity or suggest improvements, and then revise. They annotate the report to show which sections were influenced by AI and how they critically evaluated suggestions.

Math: Students solve problems, then use AI to check their work or explain alternative solutions. They compare their approach to the AI’s and reflect on differences, showing their reasoning process.

4. Full AI

Traditional Assessment:

Essay: Research paper on a historical event.

Science Project: Design an experiment and analyze results.

Math: Complex, multi-step real-world application project.

AI-Adjusted Scenario:

Essay: Students use AI to research, draft, revise, and format the entire paper. The assessment focuses on how effectively students direct the AI, set parameters, and evaluate the final product. They submit a process log showing their prompts and decision-making.

Science Project: Students use AI to help design the experiment, simulate data, analyze results, and create visualizations. Assessment is based on how students direct the AI, interpret results, and justify their choices.

Math: Students use AI to model real-world scenarios (e.g., budgeting for a school event), generate solutions, and present findings. They are assessed on how they guide the AI, interpret outputs, and communicate results.

5. AI Exploration

Traditional Assessment:

Essay: Creative writing assignment.

Science Project: Open-ended inquiry project.

Math: Design your own math investigation.

AI-Adjusted Scenario:

Essay: Students co-design a creative writing task with the teacher, using AI to experiment with narrative styles, generate plot twists, or create interactive stories. They reflect on how AI expanded their creative process.

Science Project: Students and the teacher co-create a project exploring AI’s role in scientific discovery (e.g., using AI to analyze citizen science data). Students document their process and present novel findings.

Math: Students use AI to explore unsolved math problems, generate conjectures, or visualize complex concepts. They work with the teacher to set goals and reflect on how AI changed their mathematical thinking.

Notice how the creative work, and the follow-up defense of learning, change as you move into Levels 3, 4, and 5 of the AIAS.

This brings us back to our conversation about the inverted Bloom’s Taxonomy.

Dr. M. Workmon Larsen recently wrote a fantastic piece about the changes we are seeing in education and learning with the advent of generative AI. I urge you to read the entire article, but she gets to the real crux of the issue when she discusses how AI is now challenging what we believed to be true about Bloom’s Taxonomy.

Traditional Bloom’s Taxonomy is a climb — learners start at the bottom, remembering and understanding concepts, before advancing to applying, analyzing, evaluating, and eventually creating. This made sense in a world where knowledge was considered more static or even a smidge more linear, but AI disrupts this model.

The gateway of learning in the age of AI is creation.

Starting with creation encourages learners to experiment, build, and test real-world ideas, sparking curiosity and uncovering the questions that drive deeper understanding. By engaging in creation, learners explore how tools work through hands-on experimentation and real-world application.

Learners begin by evaluating their outcomes, asking: What needs to change to improve this result? They then move to analyzing the problem: Why did this approach lead to this outcome? Next, they apply their insights to adjust and refine their work: What can I do differently next time? Through this process, learners build understanding by connecting their discoveries to broader concepts and systems. Finally, they remember key lessons and integrate them into future iterations.

Create is the entry point, not the pinnacle. It drives learners to evaluate the outcomes of their work, analyze what worked (and what did not), and apply what they’ve learned in new ways. This isn’t about skipping foundational knowledge — it’s about embedding that knowledge in meaningful action.

In Levels 3, 4, and 5, creation is the entry point. Learners defend their knowledge and decisions, then transfer their understanding and skills to new, authentic applications.

This may scare you. It sure looks different.

But, things have changed. We can’t roll out the same playbook year after year. It’s time to adjust, as we always have in education, and change our assessments in the AI age.